Gabriel Huang (he/him)

PhD, Mila & University of Montreal

Research Scientist, ServiceNow

I am a research scientist at ServiceNow working on the reliability and security of autonomous AI agents that have access to APIs and UIs. My collaborators and I have released DoomArena, a framework for agentic security. Previously, we worked on synthetic dialogue generation for distilling and evaluating AI agents, covering simulated users and company APIs and policies, with an emphasis on rigorous evaluation of our systems using both human labelers and LLM-as-a-Judge. This work led to the release of the TapeAgents agentic framework. I did my PhD with Simon Lacoste-Julien at Mila Quebec AI Institute, Université de Montréal. I interned at Google Research, where I worked on multimodal pretraining for video captioning. I also hold two MSc degrees, from Ecole Normale Supérieure and CentraleSupélec, where I specialized in machine learning, computer vision and statistics.

Publications

See Google Scholar for my most recent publications.

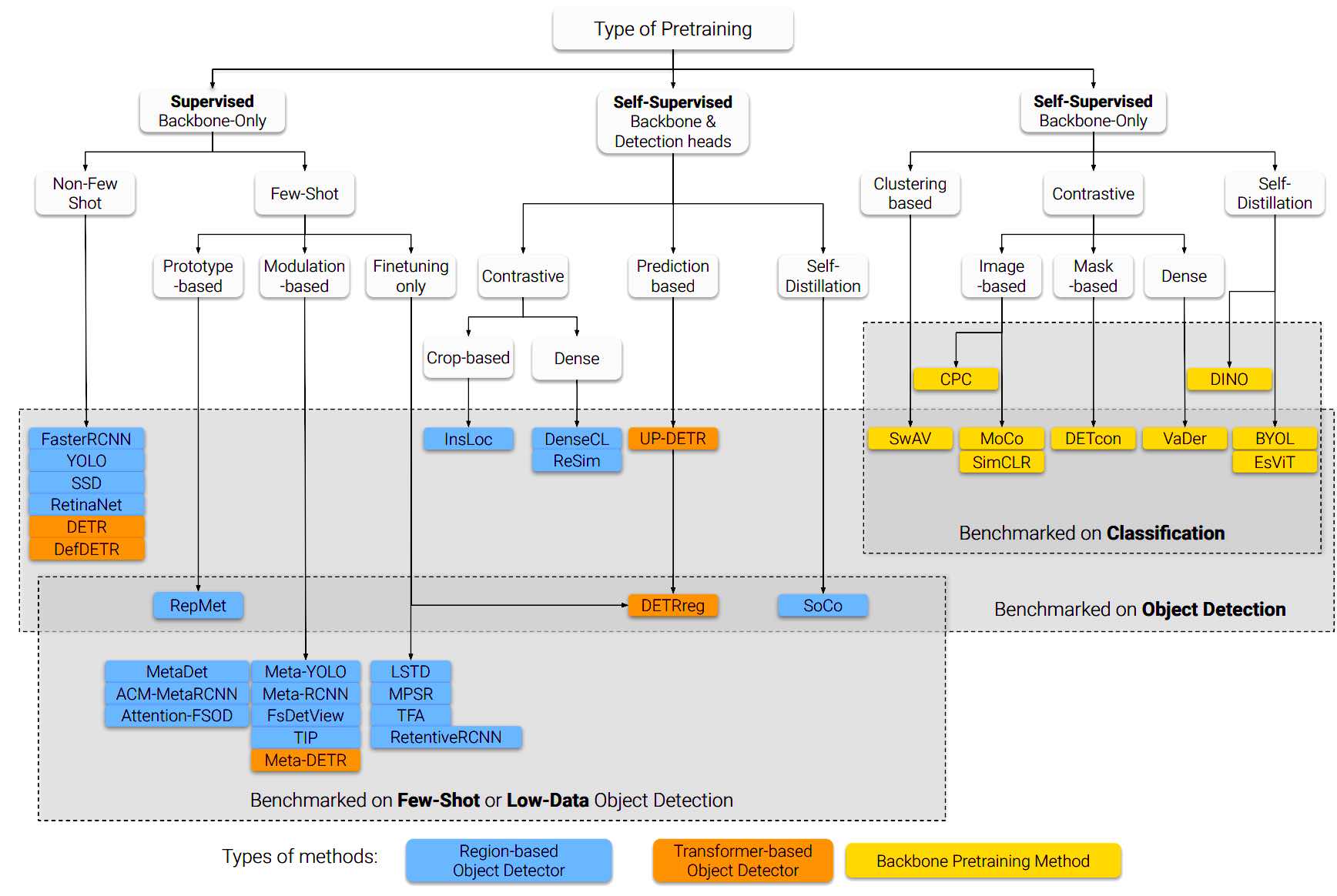

Gabriel Huang, Issam Laradji, David Vazquez, Simon Lacoste-Julien, Pau Rodriguez

Submitted to IEEE TPAMI.

Namyeong Kwon, Hwidong Na, Gabriel Huang, Simon Lacoste-Julien

ICLR'21 paper.

This paper introduces Centroid Networks for Few-shot Clustering and Unsupervised Few-shot Classification.

Gabriel Huang, Hugo Larochelle, Simon Lacoste-Julien

ICLR'19 workshop.

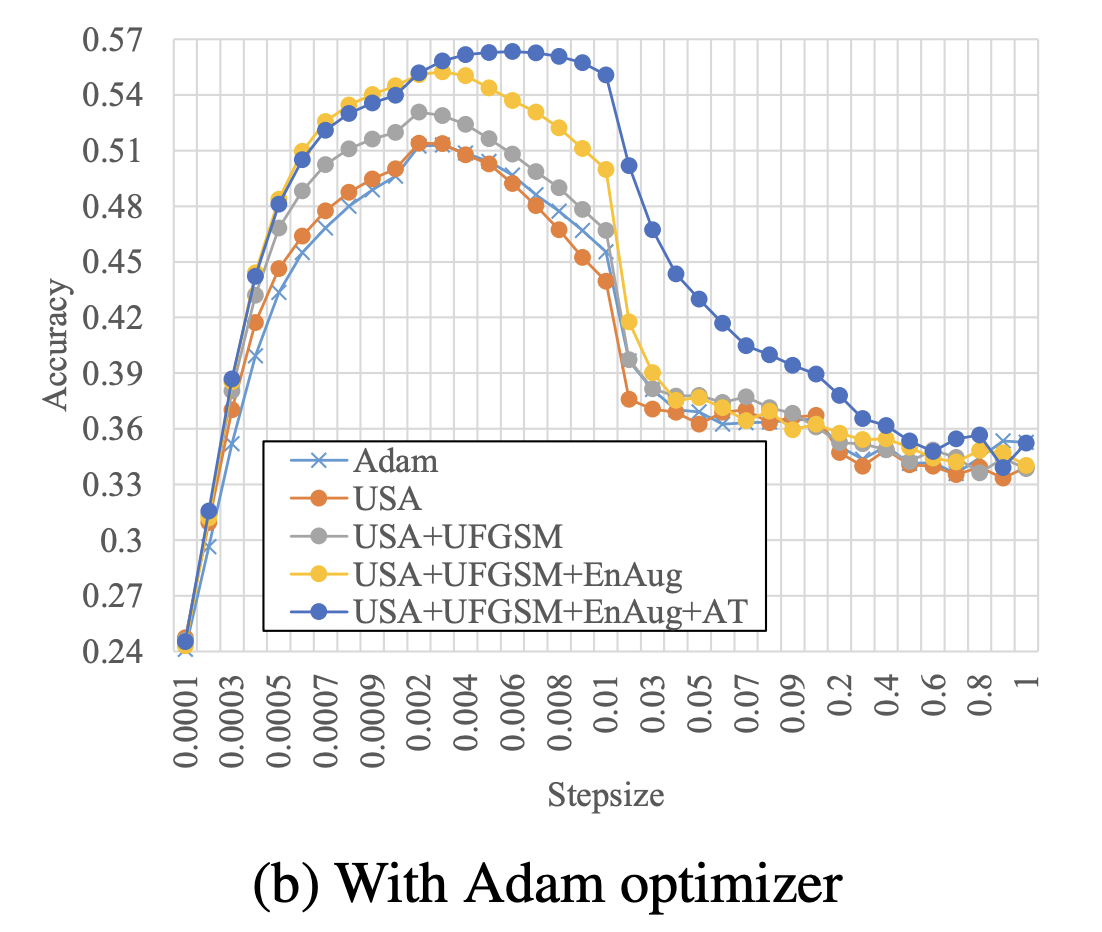

Gauthier Gidel, Reyhane Askari Hemmat, Mohammad Pezeshki, Gabriel Huang, Rémi Lepriol, Simon Lacoste-Julien, Ioannis Mitliagkas.

AISTATS 2019

Gabriel Huang, Hugo Berard, Ahmed Touati, Gauthier Gidel, Pascal Vincent, Simon Lacoste-Julien.

ICML'17 Workshop, ICLR'18 Workshop, Montreal AI Symposium 2018, Submitted to JMLR

Edouard Oyallon, Sergey Zagoruyko, Gabriel Huang, Nikos Komodakis, Simon Lacoste-Julien, Matthew Blaschko, Eugene Belilovsky.

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) 2018

Negative Momentum

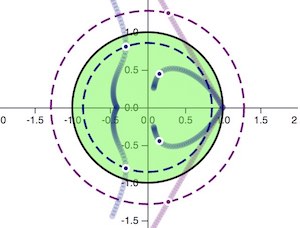

Below is an interactive visualization of our paper Negative Momentum for Improved Game Dynamics:

(a) Learning rate (lr) and momentum (beta) hyperparameters.

(b) Resulting eigenvalues in the complex plane for SGD and SGD+momentum.

There is convergence if and only if all eigenvalues are inside the convergence ball (green).

Try to find hyperparameters that give convergence.

[Interactive controls: set the learning rate for SGD without momentum, and the learning rate and momentum for SGD with momentum; for each, the widget reports whether the eigenvalues lie inside or outside the convergence ball.]
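As a rough, non-interactive illustration of the convergence criterion above, the sketch below builds the matrix of one gradient step with heavy-ball momentum on a linear vector field and checks whether all of its eigenvalues lie inside the unit disk. It assumes simultaneous updates, a linear(ized) vector field with Jacobian `jac`, and that the "convergence ball" is the unit disk; `heavy_ball_operator` and `converges` are hypothetical helper names, not code from the paper, and the sketch does not model the alternating updates analyzed there.

```python
import numpy as np


def heavy_ball_operator(jac: np.ndarray, lr: float, beta: float) -> np.ndarray:
    """Matrix of one heavy-ball step acting on the stacked state (x_t, x_{t-1}).

    Assumed dynamics (simultaneous updates on a linear vector field v(x) = jac @ x):
        x_{t+1} = x_t - lr * jac @ x_t + beta * (x_t - x_{t-1})
    beta = 0 recovers plain gradient steps; beta < 0 is negative momentum.
    """
    n = jac.shape[0]
    eye = np.eye(n)
    top = np.hstack([(1.0 + beta) * eye - lr * jac, -beta * eye])
    bottom = np.hstack([eye, np.zeros((n, n))])  # copies x_t into the x_{t-1} slot
    return np.vstack([top, bottom])


def converges(jac: np.ndarray, lr: float, beta: float):
    """Return (inside_unit_disk, spectral_radius, eigenvalues) of the step operator."""
    eigvals = np.linalg.eigvals(heavy_ball_operator(jac, lr, beta))
    rho = float(np.max(np.abs(eigvals)))
    return rho < 1.0, rho, eigvals


if __name__ == "__main__":
    # Bilinear game min_x max_y x*y: vector field (y, -x), Jacobian eigenvalues +/- i.
    bilinear = np.array([[0.0, 1.0], [-1.0, 0.0]])
    # Minimization of x^2 / 2: gradient x, Jacobian [[1]].
    quadratic = np.array([[1.0]])

    for name, jac in [("bilinear", bilinear), ("quadratic", quadratic)]:
        for lr, beta in [(0.1, 0.0), (0.1, -0.5), (0.5, 0.5)]:
            ok, rho, _ = converges(jac, lr, beta)
            print(f"{name:9s} lr={lr:<4} beta={beta:<5} rho={rho:.4f} converges={ok}")
```

For the bilinear game, the Jacobian eigenvalues are ±i, so plain gradient steps (beta = 0) give eigenvalues 1 ∓ i·lr with modulus sqrt(1 + lr²) > 1 for any positive learning rate, which is why the check reports divergence in that case.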

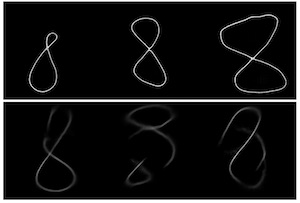

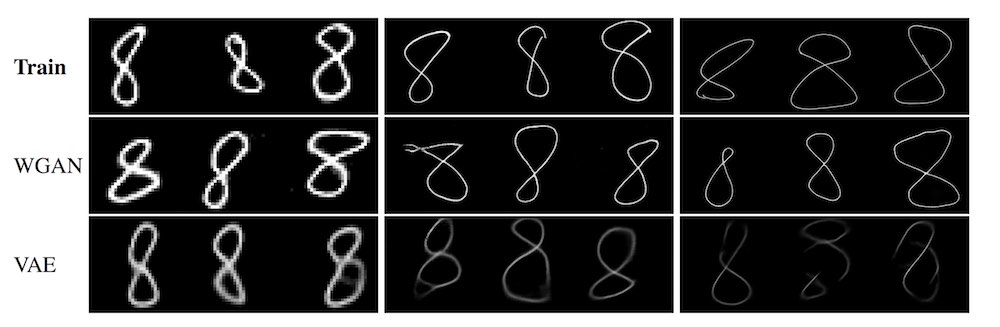

Thin-8 dataset

The Thin-8 dataset consists of 1585 grayscale handwritten images of the digit 8, with a resolution of 512x512.

Sixteen people were each asked to draw the digit 8 about 100 times, using a pen on a tablet PC running Microsoft Windows.

It was collected in October 2017 at the University of Montreal.

Download the Thin-8 dataset here.

If you use the Thin-8 dataset, please cite our paper:

@article{huang2018parametric,
  title={Parametric Adversarial Divergences are Good Task Losses for Generative Modeling},
  author={Huang, Gabriel and Berard, Hugo and Touati, Ahmed and Gidel, Gauthier and Vincent, Pascal and Lacoste-Julien, Simon},
  journal={arXiv preprint arXiv:1708.02511},
  year={2017}
}

Thanks to Alex, Akram, Aristide, David, Dendi, Eugene, Jae, Joao, Liam, Rémi, Rosemary, Shawn, Sina, and Xing for scribbling all those samples!

Twitter Feed

Tweets by GabrielHuang9

Contact

Email: gbxhuang@gmail.com

In person: Mila, 6666 St-Urbain, #200, Montreal, QC, H2S 3H1, Canada